In AI content creation, chatbot development, and prompt engineering, it pays to understand how your text interacts with large language models (LLMs). Whether you’re a beginner experimenting with GPT-4 for the first time or a developer integrating AI models into your product, one challenge remains constant: estimating token usage accurately. That’s where LLM Token Counter comes in: a free, browser-based tool that simplifies this task.

Why Token Counting Matters in 2026

When you interact with modern AI models like GPT-4, Claude, Gemini, or LLaMA, they don’t read words or characters the way humans do. Instead, text is broken down into tokens: short chunks of text (a whole word, part of a word, or a punctuation mark) that the model processes as single units. Token counts determine:

📌 Cost of API usage

📌 How much of the model’s context window your text uses

📌 Whether your full prompt fits or gets truncated

Each model family uses its own tokenizer, so the same sentence can consume a different number of tokens on different models. That makes a token counter a practical necessity for anyone budgeting prompts or API spend.
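To make the cost and context points concrete, here is a minimal Python sketch of the arithmetic involved. The function names, the prices, and the context window size are hypothetical values chosen for illustration; they are not taken from any provider’s price list, so substitute the figures published for the model you actually use.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_million: float,
                      output_price_per_million: float) -> float:
    """Cost of one request, given token counts and per-million-token prices."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


def fits_context(input_tokens: int, max_output_tokens: int,
                 context_window: int) -> bool:
    """True if the prompt plus the reserved output budget fits in the window."""
    return input_tokens + max_output_tokens <= context_window


# Hypothetical numbers for illustration only.
cost = estimate_cost_usd(1_200, 400,
                         input_price_per_million=2.50,
                         output_price_per_million=10.00)
print(f"Estimated cost: ${cost:.4f}")
print("Fits in window:", fits_context(1_200, 400, context_window=8_192))
```

Input and output tokens are usually priced separately, which is why the sketch takes two prices. Reserving room for the reply in `fits_context` matters because the prompt and the generated output typically share the same window.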

What Is LLM Token Counter? (Simple & Powerful)

LLM Token Counter (https://llmtokencounter.online/) is a free online platform that instantly estimates token counts, along with word and character counts, for text before you process it with a large language model. Whether you’re working on prompts, designing chatbot dialogues, or optimizing API costs, this tool gives you a close estimate of how many tokens your input will consume.

✅ Instant Results — Paste your content and see token count immediately.

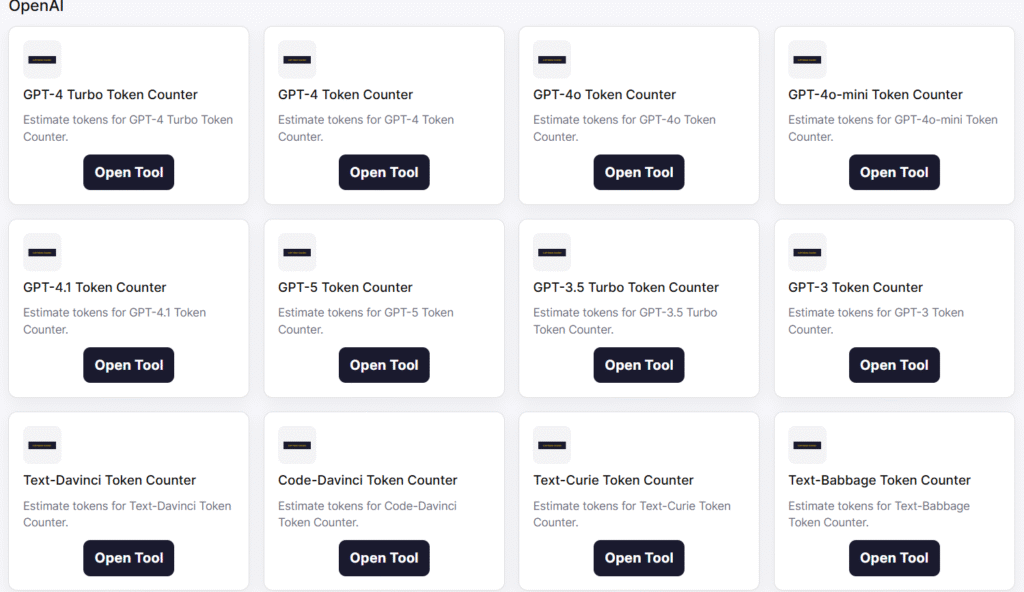

✅ Supports 60+ LLM Models — From GPT-4 and GPT-5 to Claude, LLaMA, Mistral, and more.

✅ Universal Token Estimator — For general planning when model details are unknown.

✅ Privacy-Friendly — Your text isn’t logged or stored.

Use Cases — Who Benefits Most?

✨ AI Developers: Build smarter apps with predictable costs and context management.

✍️ Content Creators: Estimate how long your AI-generated articles or scripts might be.

🧠 Prompt Engineers: Test and refine prompts for precision and efficiency.

📊 Businesses: Predict expenses before scaling AI workflows.

📚 Students & Researchers: Learn how tokens work and optimize experiments.

How Does It Work?

You simply paste your text into the tool’s interface. Behind the scenes, LLM Token Counter uses model-specific tokenization heuristics — closely approximating how real LLMs count tokens — to provide:

✔ Total token count

✔ Word count

✔ Character count

✔ Average characters per token

This allows you to plan costs, structure prompts, and prevent issues like context overflow or prompt truncation long before sending requests to an expensive AI API.
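As a rough illustration of what a heuristic estimator can look like, the Python sketch below computes the same four statistics from a fixed characters-per-token ratio. This is an assumption made for the example, not the tool’s actual algorithm, which the article does not describe. The default of 4 characters per token is a commonly quoted rule of thumb for English text; real ratios vary by model and language, and exact counts require the model’s own tokenizer.

```python
import math


def estimate_stats(text: str, chars_per_token: float = 4.0) -> dict:
    """Heuristic text statistics; the token count is an estimate, not exact."""
    characters = len(text)
    words = len(text.split())  # whitespace-separated words
    # Round up so that any non-empty text counts as at least one token.
    tokens = math.ceil(characters / chars_per_token) if characters else 0
    avg_chars_per_token = characters / tokens if tokens else 0.0
    return {
        "tokens": tokens,
        "words": words,
        "characters": characters,
        "avg_chars_per_token": round(avg_chars_per_token, 2),
    }


stats = estimate_stats("Make every token count.")
print(stats)
```

A model-specific estimator could swap in a different `chars_per_token` value per model family. The ratio is kept as a parameter here for that reason.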

Final Thoughts: Make Every Token Count

In 2026, as AI becomes more deeply integrated into everyday digital tools, token management is no longer an advanced skill; it’s a basic requirement for responsible AI usage. LLM Token Counter gives creators, engineers, and innovators a clear view of how many tokens their text consumes, helping them avoid overspending, exceeding model limits, or losing critical context in their requests.

💡 Try it today at https://llmtokencounter.online/ and make smarter, more efficient AI decisions with every prompt.